When novelty fades, expectations grow

There was a brief moment when artificial intelligence felt electric. A prompt went in, a paragraph or picture came out, and the gap between imagination and output collapsed in real time. That novelty didn’t last. As our collective attention span thinned, the thrill of “instant creation” quietly transformed into impatience. The same tools that once amazed us are now judged by milliseconds.

This shift matters, because the physical world has to bend to our psychological one. As expectations rise, the infrastructure underneath AI has to stretch… often to uncomfortable limits. What we call “datacenters” today aren’t really warehouses for information anymore. They are factories built to satisfy boredom.

From Data Storage to Processing Factories

Traditional datacenters were designed to hold data: files, backups, websites. AI flipped that model. Large language models, image generators, and video systems don’t just retrieve information—they continuously compute.

Calling these facilities “datacenters” undersells what they’ve become.

What changed?

- Inference-heavy workloads: Every prompt requires real-time mathematical computation.

- Parallel processing: Many GPUs working simultaneously, both within a single request and across thousands of concurrent ones.

- Latency sensitivity: A delay of seconds now feels like failure, not inconvenience.

These buildings function more like industrial processing plants than libraries. Electricity flows in, heat pours out, and answers emerge.

Why Models Feel Slower as They Improve

There’s a paradox at play. Models are undeniably better than they were a few years ago, yet many users feel they’re slower. That’s not because the technology regressed; it’s because demand escalated.

Expectation vs. Reality in AI Performance

| Era of AI Use | Typical User Expectation | Infrastructure Load |
|---|---|---|
| Early demos | “Anything at all” | Low, bursty usage |
| Public launch | Fast text & images | Moderate concurrency |
| Today | Long-form text, images, video | Extreme parallel demand |

As expectations shift from novelty to utility, infrastructure strain grows faster than perceived performance gains.

We’re asking more complex questions, generating longer outputs, and stacking multimodal requests, all while expecting instant feedback. The system isn’t slower… we’re just really f’n needy.
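For the numerically inclined, here is a rough sketch of why better hardware can still feel slower. It borrows the textbook M/M/1 queueing formula (mean response time = 1 / (service rate − arrival rate)), which is a big simplification of how real inference clusters behave. The rates below are hypothetical, chosen only to make the point.

```python
def mean_response_time(service_rate: float, arrival_rate: float) -> float:
    """Mean time in system for an M/M/1 queue: W = 1 / (mu - lambda)."""
    if arrival_rate >= service_rate:
        # Demand meets or exceeds capacity: the queue grows without bound.
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


# Hypothetical numbers, for illustration only (requests per second).
before = mean_response_time(service_rate=10.0, arrival_rate=5.0)
# Capacity doubles, but demand more than triples.
after = mean_response_time(service_rate=20.0, arrival_rate=18.0)

print(f"before: {before:.2f}s, after: {after:.2f}s")
# prints "before: 0.20s, after: 0.50s"
```

In this toy model the hardware got twice as fast and the wait still got longer, because demand crept closer to capacity.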

Daisy-Chaining the Planet

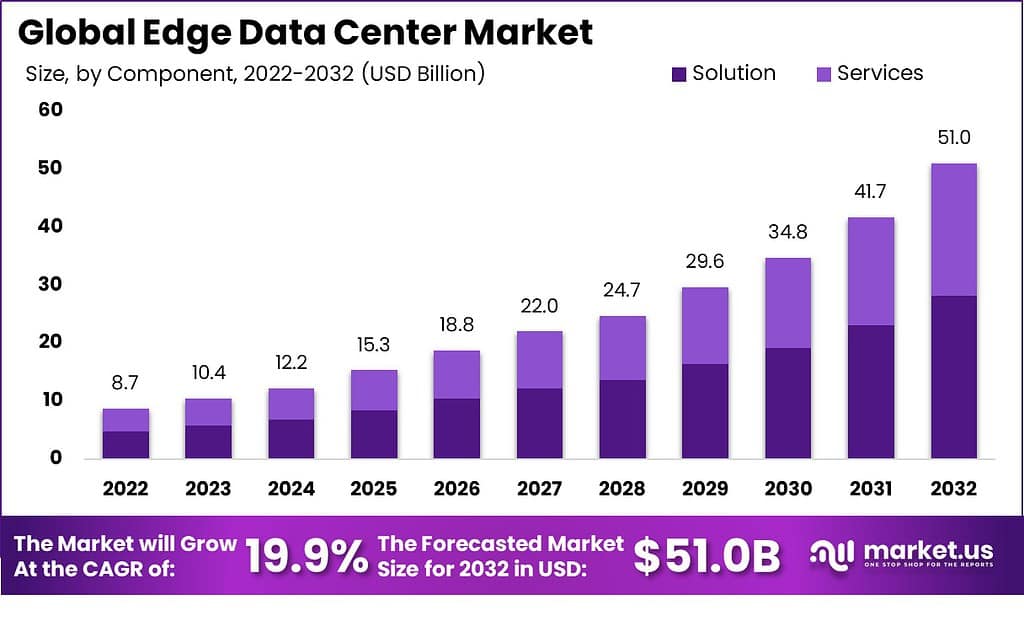

Unless hardware efficiency leaps forward at the same pace as model complexity, there’s only one option: scale outward.

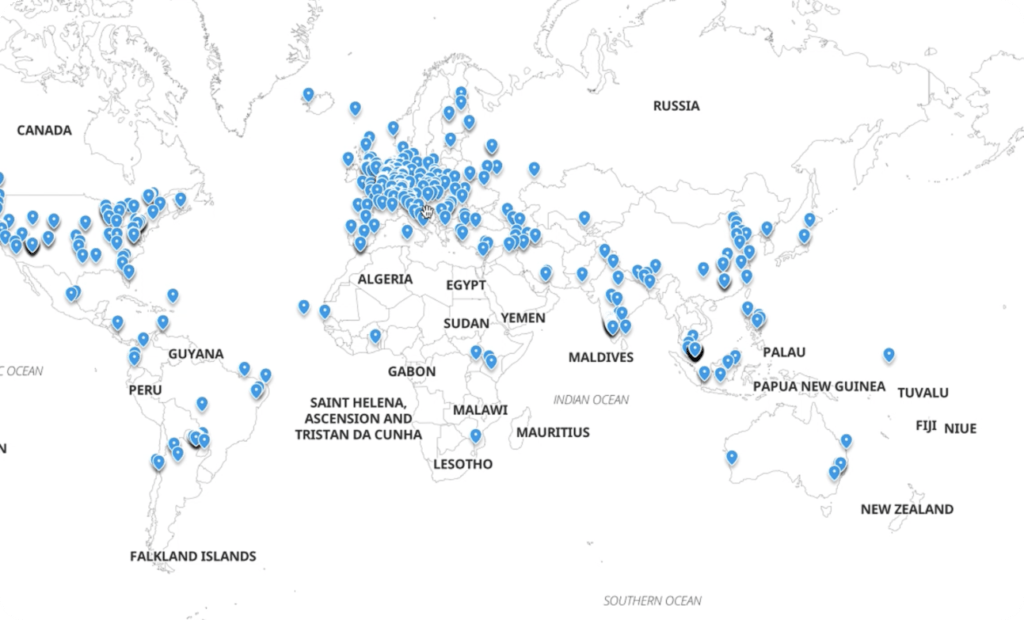

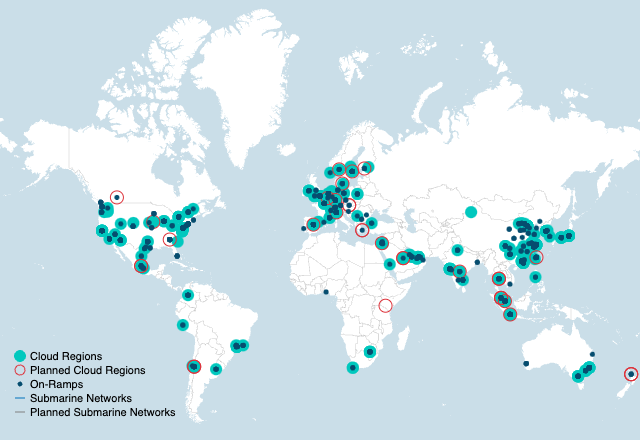

That’s why processing centers are multiplying across continents. Workloads are distributed, routed, cached, and re-routed, often mid-response, to keep latency within acceptable bounds. The globe itself becomes part of the machine.

This isn’t redundancy for safety alone… it’s redundancy for satisfaction.
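To make the routing idea concrete, here is a minimal sketch of latency-aware region selection. Everything in it is an assumption for illustration: the `Region` fields, the `pick_region` helper, the region names, and the numbers are hypothetical, and real schedulers weigh far more than two signals.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Region:
    name: str
    network_rtt_ms: float  # round trip between the user and this region
    queue_wait_ms: float   # estimated wait before compute is free

    @property
    def estimated_latency_ms(self) -> float:
        return self.network_rtt_ms + self.queue_wait_ms


def pick_region(regions: List[Region], budget_ms: float) -> Optional[Region]:
    """Return the lowest-latency region within budget, or None if none qualifies."""
    within = [r for r in regions if r.estimated_latency_ms <= budget_ms]
    return min(within, key=lambda r: r.estimated_latency_ms, default=None)


# Hypothetical regions: the closest one is not always the fastest.
regions = [
    Region("nearby-but-busy", network_rtt_ms=20, queue_wait_ms=400),
    Region("far-but-idle", network_rtt_ms=150, queue_wait_ms=30),
]

choice = pick_region(regions, budget_ms=300)
print(choice.name if choice else "no region within budget")
# prints "far-but-idle"
```

The request travels farther and still arrives sooner, which is the whole argument for spreading capacity across the map.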

Video Changes Everything

The next pressure wave is already visible. With OpenAI advancing tools like Sora, AI moves from text and images into continuous motion. Video generation requires significantly more sustained compute than text or images.

Relative Compute Demand by Media Type (illustrative)

Text Generation: █

Image Generation: ███

Video Generation: ██████████

Each second of generated video requires:

- Temporal consistency across frames

- Higher memory bandwidth

- Longer sustained GPU usage

Once video becomes commonplace, today’s processing centers will feel undersized overnight.
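A quick back-of-the-envelope sketch shows how fast that happens. It takes the bar lengths from the chart above as relative costs (text 1, image 3, video 10), which are illustrative rather than measured, and applies them to two hypothetical request mixes.

```python
from typing import Dict

# Relative per-request cost, read off the bar chart (text = 1 bar).
RELATIVE_COST = {"text": 1, "image": 3, "video": 10}


def relative_load(mix: Dict[str, float]) -> float:
    """Weighted average cost per request for a given share of each media type."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return sum(RELATIVE_COST[kind] * share for kind, share in mix.items())


# Hypothetical request mixes, for illustration only.
today = {"text": 0.80, "image": 0.15, "video": 0.05}
video_heavy = {"text": 0.50, "image": 0.20, "video": 0.30}

growth = relative_load(video_heavy) / relative_load(today)
print(f"load per request grows {growth:.2f}x")
# prints "load per request grows 2.34x"
```

Under these assumptions, the same number of requests needs more than twice the compute once video takes a 30% share, before any growth in traffic.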

Boredom as an Infrastructure Driver

The uncomfortable truth is this: these facilities aren’t built because we need more answers. They’re built because waiting feels intolerable. As soon as AI becomes normal, patience evaporates.

Datacenters, or processing centers, are monuments to that impatience. Concrete, steel, silicon, and power, assembled not for curiosity but for immediacy.

The question isn’t whether more will be built. It’s whether our expectations will ever slow down enough to let them catch up.
